De-indexing is an SEO practice that tells search engines to remove certain pages from their index. These can be pages deemed low quality (for example, because they contain very little text) or pages considered private that should not appear in search engine results. In simple terms, it removes from Google all the pages that are useless to the Internet user. Implementing this process makes the pages of your website look more reliable and of higher quality in the eyes of Google.

More than 10 million pieces of content are published on the web every day, and the web keeps growing day by day.

To stand out from this mass, it is important to review your SEO strategy, which includes several kinds of techniques, including de-indexing. So:

- De-indexing: What is it?

- What are the different methods of deindexing?

- How and when does Google deindex web pages?

These are some of the questions I will answer in this guide.

Chapter 1: Deindexing, what is it?

To create, it is sometimes necessary to destroy, they say! The desire to see their pages featured at the top of the search engine results pushes SEOs to make valuable adjustments.

But before we get to the notion of de-indexing, it is important that you have an idea of what indexing is.

1.1. What is SEO indexing?

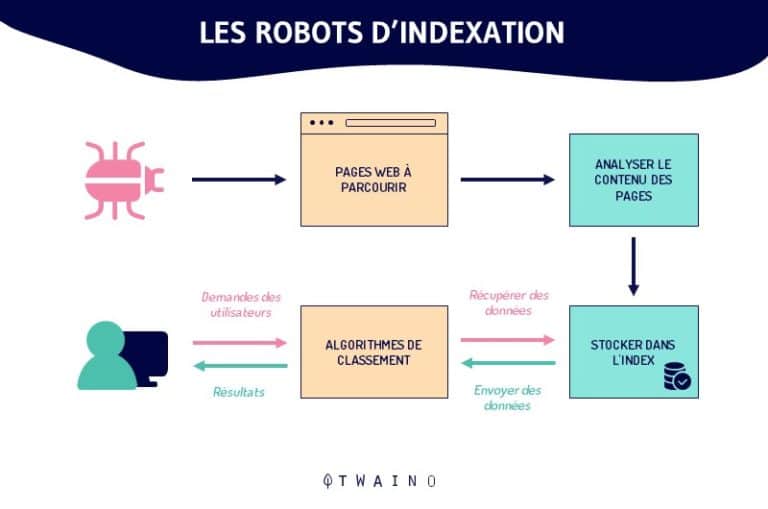

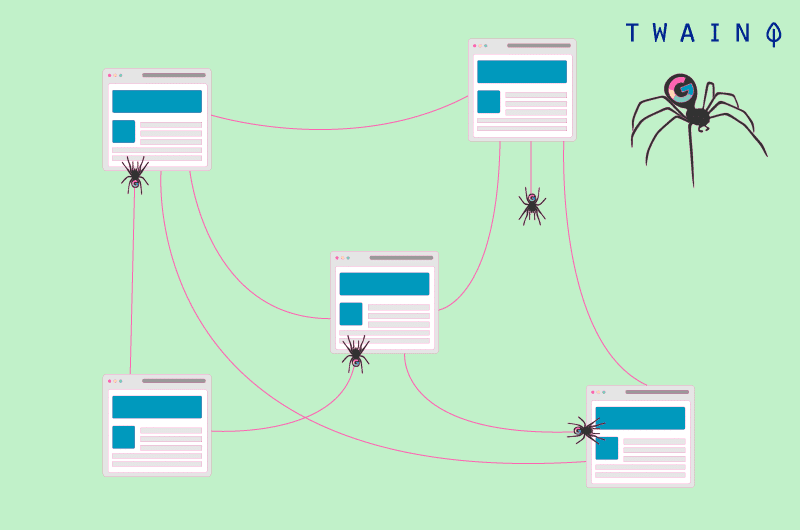

Historically referred to as the “registration phase of a website”, indexing is the set of processes by which Google's robots (spiders) crawl, process, and then classify the content and pages of a website so that they can be presented on a results page.

Without this step, the sites that Internet users search for will not be displayed.

Indeed, indexing is the part of organic SEO (natural referencing) that aims to place the pages of a website in a search engine's index. It is one of the prerequisites for a site to be ranked fairly in the results pages of a search engine.

1.2. The time it can take for Google to index a page

A website is not indexed by Google immediately after it goes online. The robots in charge of crawling sites and pages may not have reached your site yet, and this often takes time.

So, to speed things up, you can make indexing easier for the search engine robots by:

- Correcting the factors that block indexing by Google;

- Requesting a URL inspection in Google Search Console;

- Performing a daily check of existing crawl reports on Search Console to detect and correct any problems;

- Creating new content that proves your site is growing;

- Structuring each page in such a way that it is easier for the robots to navigate.

Thus, you need to implement certain strategies so that your website is indexed efficiently by the bots.

This starts with removing pages that do not comply with Google’s rules from your website.

1.3. What does Deindexing mean?

Since the appearance of Panda, an algorithmic filter that penalizes websites offering low-quality content, it has become very important to present to search engines only those pages that have some value to Internet users.

Although de-indexing was already in use, it was Google's penalties around content quality that led many to discover a new area of the SEO world: the de-indexing of low-quality content.

This expression, created as a counterpart to the word “indexing”, covers all the procedures used to remove certain web pages from search engines' indexes so that only the better-quality ones are presented.

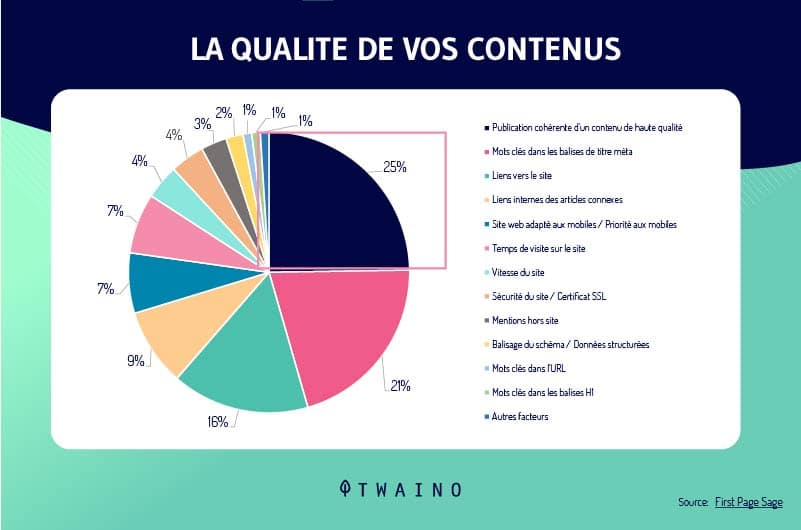

To properly satisfy its users, Google pushes SEOs to produce only content that provides real added value. This sometimes involves removing content that does not. Such content includes:

1.3.1. Internal engine results pages

It is normal for an internal search engine to become necessary as a website grows in number of pages. The results pages it generates, however, contain very little valuable content.

These pages should be de-indexed, not because they contain spam, but because they may waste the crawl budget. As a consequence, Google could spend its time crawling the internal results pages and neglect the content pages of the site.

Indeed, internal search results pages are low-quality pages in terms of content. For more details, see this YouTube video on the subject: https://youtu.be/k-MmQS98bCE

1.3.2. Duplicate content pages

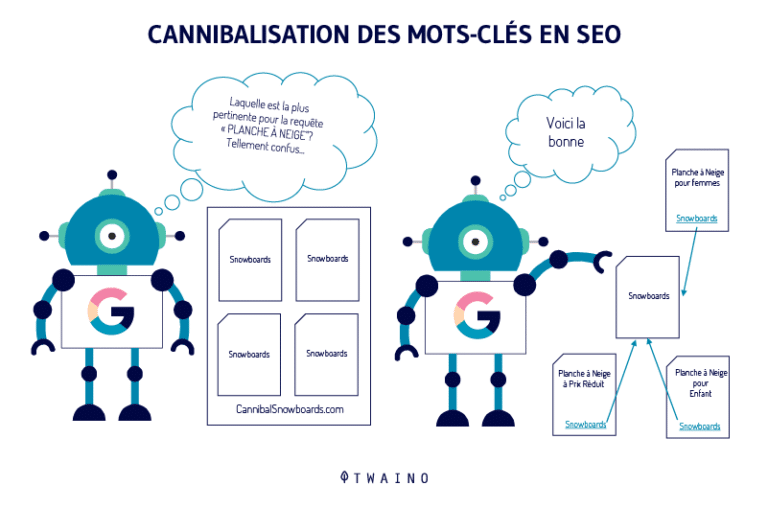

Duplicate content refers to pages that are duplicated internally on a website or externally on other sites. These are pages with very similar or identical content.

The search engine then filters its results to select the version it considers to be the original.

The other pages are not necessarily de-indexed and can still keep their PageRank and their place in the search engines' index. Even if search engines usually ignore them, these pages are still crawled by bots.

If you have a lot of these pages, they may consume a good part of your crawl budget. Note that there are three kinds of duplicate content:

- The totally identical pages

This is the case of mirror sites. Here, the Google search engine selects the version to show according to PageRank. A mirror site reproduces another website word for word.

It is an exact copy of another site, so its pages are perfect copies of the pages of that website.

The role of mirror sites is to make the same information available in several places and to better distribute the traffic generated by the original site.

- Similar pages, but with different TITLE and META DESCRIPTION tags

In this case of duplicate content, the original pages will be indexed and well positioned at the expense of the others. The pages that are not original will perform much worse in the results pages (SERPs).

- Pages that are different, but have similar TITLE and META DESCRIPTION tags

It is the identical tags on these pages that make them look similar. In the worst case, the content may not even be indexed by Google.

To avoid this situation, every page of your website needs its own title tag and meta description tag.

Here are some tips that will allow you to identify duplicate content:

- Do a search on Google with the syntax “…”: this is a very simple method that consists in checking whether sentences are repeated on several pages of your site or elsewhere on the web. To get satisfactory results, be specific in your searches. See my article on the 26 operators of Google.

- Use specialized tools: these are sites designed specifically to detect duplicate content, such as Copyscape, Quetext, Screaming Frog, Duplichecker, and Siteliner. They are quick and easy to use: you just enter the URL of the page and the tool takes care of detecting duplicate content.
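
The third kind of duplicate content described above (different pages sharing the same TITLE tag) can also be spotted with a short script. The following is only a minimal sketch: the `find_duplicate_titles` helper and its input format (a dictionary mapping each URL to its HTML source) are assumptions made for this example, and it compares title tags only, not meta descriptions.

```python
from collections import defaultdict
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collects the text found inside the <title> tag of a page."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def find_duplicate_titles(pages):
    """Group URLs by normalized title and keep groups with more than one URL.

    `pages` maps a URL to its HTML source (hypothetical input format).
    """
    by_title = defaultdict(list)
    for url, html in pages.items():
        parser = TitleParser()
        parser.feed(html)
        # Normalize case and surrounding whitespace before comparing.
        by_title[parser.title.strip().lower()].append(url)
    return {title: urls for title, urls in by_title.items() if len(urls) > 1}
```

Any group returned by this function is a set of pages whose titles should be rewritten so that each one is unique.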

1.3.3. Non-conforming forms

Low-quality content can also involve certain forms, in particular:

- Forms that lead to content that is already available on another indexed page.

- Forms that lead to pages that do not necessarily display content, for example the checkout on an e-commerce site or the login form for a member area.

1.3.4. Pages that offer spun content (repeated)

Content spinning is a technique for rewriting a piece of content several times in order to obtain several similar texts on the same subject.

This process, which has been developed by specialists, allows the writer to create an original text and then obtain several other versions with different wording, all having the same meaning.

In addition to the writer's work, spinning software is also used to produce the text automatically.

However, when content spinning is poorly done by the writer, it can degrade into poor-quality spun text.

This then confuses search engines and gives readers a disappointing experience. This is why these types of content should be de-indexed.

1.3.5. Pages from the import of a demo theme

These are pages created automatically when a demo theme is imported. They are useless pages that should be de-indexed.

1.3.6. Other pages to be de-indexed

In addition to the five types of content listed so far, the following should also be de-indexed as soon as possible:

- PDFs whose content is similar to an HTML page;

- Seasonal content, obsolete pages, and old services that are no longer offered;

- Anything that is pre-production, as well as confidential information.

In short, content that does not bring real added value must be de-indexed.

Chapter 2: De-indexing methods and techniques

De-indexing can be done using several techniques. These vary depending on whether or not you want to keep the content accessible to readers.

If you want to remove or delete pages from the Google index, you will have to take specific actions.

To avoid confusion, it is important to understand the distinction between blocking Google from crawling a page and de-indexing it.

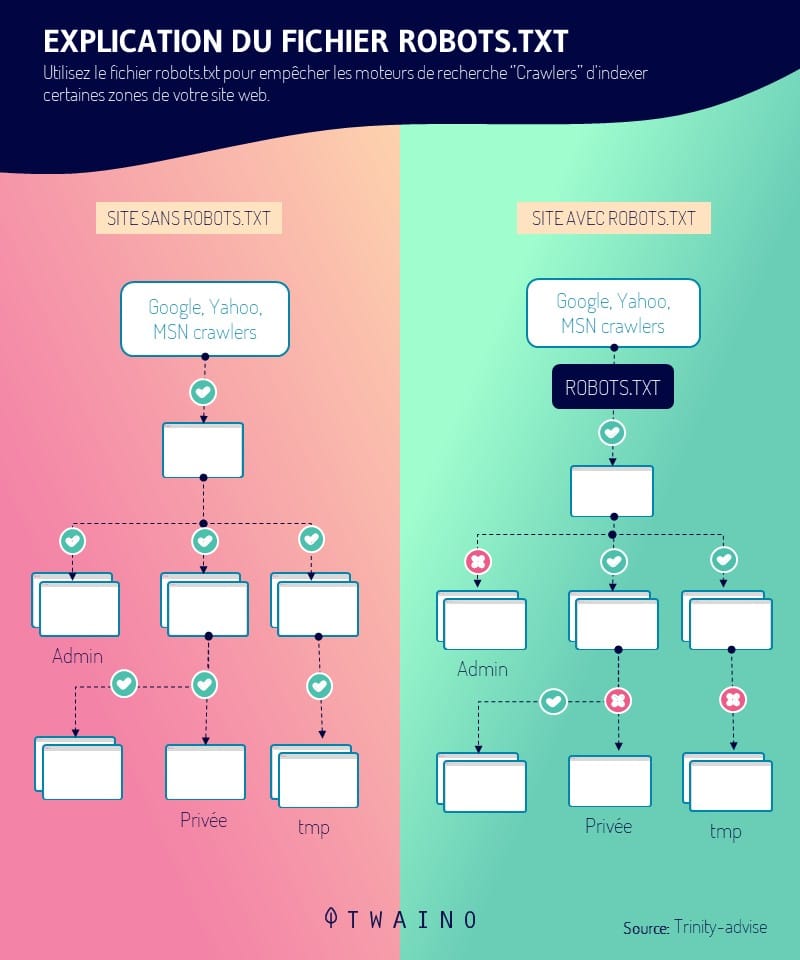

Indeed, adding a “disallow” directive in robots.txt is not intended to de-index pages, but rather to prevent Google from crawling them.
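
To make the distinction concrete, here is a minimal sketch using Python's standard `urllib.robotparser` module. The robots.txt content, the example.com URLs, and the `can_crawl` helper are assumptions made for this illustration. The only question a “disallow” rule answers is whether a robot may crawl a URL; it says nothing about whether that URL stays in the index.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search/
"""


def can_crawl(robots_txt, user_agent, url):
    """Return True if robots.txt allows `user_agent` to crawl `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# The internal search results are closed to crawling, the blog is not.
blocked = can_crawl(ROBOTS_TXT, "Googlebot", "https://example.com/search/sofa")
allowed = can_crawl(ROBOTS_TXT, "Googlebot", "https://example.com/blog/post")
```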

The de-indexing techniques I will present to you are all effective. However, there is no point in using all of them at the same time:

2.1. Using the robots meta tag

The robots meta tag is placed in the head section of the page's HTML. It gives search engines indexing instructions for a piece of site content. For de-indexing, you have two options:

- De-index the content while still letting robots follow the links it contains, with the “noindex, follow” directive. This is recommended when you want to de-index content without losing its links.

- De-index pages while preventing links from being followed, with the “noindex, nofollow” directive.
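
As an illustration, the two options can be written as robots meta tags and checked with a short script. This is a minimal sketch: the `robots_directives` helper is a hypothetical name introduced for this example, and the two constants show the standard form of the tag for each option.

```python
from html.parser import HTMLParser

# The two options described above, written as robots meta tags.
NOINDEX_FOLLOW = '<meta name="robots" content="noindex, follow">'
NOINDEX_NOFOLLOW = '<meta name="robots" content="noindex, nofollow">'


class RobotsMetaParser(HTMLParser):
    """Collects the directives of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def robots_directives(html):
    """Return the set of robots directives declared in a page's HTML."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives
```

A page is asking to be de-indexed when `"noindex"` appears in the returned set, which makes this kind of check handy for verifying a batch of pages after deployment.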

The next step is to create a de-indexing sitemap and submit it to Google, either through robots.txt, using the “Sitemap” directive followed by the full URL of the sitemap, or through Search Console.
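
A de-indexing sitemap is an ordinary XML sitemap that lists the URLs you want Google to revisit. The sketch below builds one with Python's standard library; the `build_sitemap` and `robots_txt_sitemap_line` helpers and the example URLs are assumptions made for this illustration.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a sitemap XML string listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


def robots_txt_sitemap_line(sitemap_url):
    """Return the robots.txt line that declares a sitemap."""
    return f"Sitemap: {sitemap_url}"


sitemap_xml = build_sitemap(["https://example.com/old-page"])
robots_line = robots_txt_sitemap_line("https://example.com/sitemap-deindex.xml")
```

Once Google has processed the listed URLs and dropped them from its index, the temporary sitemap can be removed.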

2.2. Using the X-Robots-Tag header: noindex

This method de-indexes files or pages without changing their source code, by sending the noindex directive in the HTTP response headers. It is typically used for:

- De-indexing PDFs;

- De-indexing images and other files.
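
In practice this header is usually added in the web server configuration. The Python sketch below only illustrates the decision logic, under stated assumptions: the list of file extensions and the `extra_headers` helper are hypothetical and should be adapted to your own site.

```python
from pathlib import PurePosixPath

# File extensions to keep out of the index (assumed list for this example).
NOINDEX_EXTENSIONS = {".pdf", ".png", ".jpg", ".jpeg", ".gif", ".doc"}


def extra_headers(url_path):
    """Return the extra response headers to send for a given URL path."""
    suffix = PurePosixPath(url_path).suffix.lower()
    if suffix in NOINDEX_EXTENSIONS:
        # The file itself is untouched; only the HTTP response changes.
        return {"X-Robots-Tag": "noindex"}
    return {}
```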

In all cases, remember not to combine two methods on the same page, as this can make your de-indexing ineffective. For example, if a page is blocked in robots.txt, Google cannot crawl it and will never see its noindex directive. For more information, see: what is the robots.txt file and how to use it?

2.3. Deindexing unnecessary pages

To de-index a lot of useless pages quickly, you have to:

- Return an HTTP 410 or HTTP 404 status code on the deleted pages. Then wait for Google to take the removal of the pages into account.

- Generate a de-indexing sitemap listing the old deleted URLs that now return a 404 or a 410.
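
The difference between the two status codes can be sketched as follows: 410 states that a page was removed on purpose, while 404 only says that nothing was found. The path sets and the `status_for` helper are hypothetical and stand in for whatever your CMS or server uses.

```python
from http import HTTPStatus

# Hypothetical paths: pages deleted on purpose and pages still online.
DELETED_PATHS = {"/old-promo", "/demo-page"}
EXISTING_PATHS = {"/", "/blog"}


def status_for(path):
    """Return the HTTP status code to send for a requested path."""
    if path in DELETED_PATHS:
        return HTTPStatus.GONE  # 410: removed deliberately
    if path in EXISTING_PATHS:
        return HTTPStatus.OK  # 200: page is still served
    return HTTPStatus.NOT_FOUND  # 404: unknown URL
```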

2.4. De-indexing old pages in favor of new, more informative ones

Here’s how to proceed if you want to de-index pages because others are more relevant:

- Set up 301 redirects from the pages deemed less relevant to the new ones. The 301 redirect is especially recommended when the less relevant pages have backlinks. It helps the new pages rank and reduces the loss of link juice.

- For Google to take these 301s into account more quickly, it is recommended to create a sitemap listing the URLs that redirect to the new pages and to submit it via robots.txt or a Search Console account.
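
A 301 redirect is simply a response with status 301 and a Location header pointing to the new page. Here is a minimal sketch of that logic; the redirect map and the `respond` helper are assumptions made for this example, and on a real site the same mapping would usually live in the server or CMS configuration.

```python
from http import HTTPStatus

# Hypothetical mapping from less relevant pages to their replacements.
REDIRECTS = {
    "/guide-2019": "/guide",
    "/old-services": "/services",
}


def respond(path):
    """Return (status, headers) for a requested path."""
    target = REDIRECTS.get(path)
    if target is not None:
        # Permanent redirect: robots and visitors are sent to the new page.
        return HTTPStatus.MOVED_PERMANENTLY, {"Location": target}
    return HTTPStatus.OK, {}
```

The keys of `REDIRECTS` are exactly the URLs to list in the sitemap mentioned above.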

Besides de-indexing pages yourself, it can also happen that Google de-indexes your pages without you asking.

Chapter 3: How does Google de-index?

You have put in a lot of effort to get your website to the top of the search engine results pages. However, after several search attempts, you can’t find your website in the search results.

This is confusing! You first need to know what could have caused this. Google states its mission as follows: “To organize the world’s information and make it universally accessible and useful”.

The firm aims to offer its users the most reliable information.

To succeed, Google strives to:

- Constantly adjust its search algorithm;

- Constantly ensure that search results improve in quality, using evaluation data collected at several levels, including from its human evaluators.

So it is easy to understand why the giant does not hesitate to sanction certain practices that it considers harmful to its users.

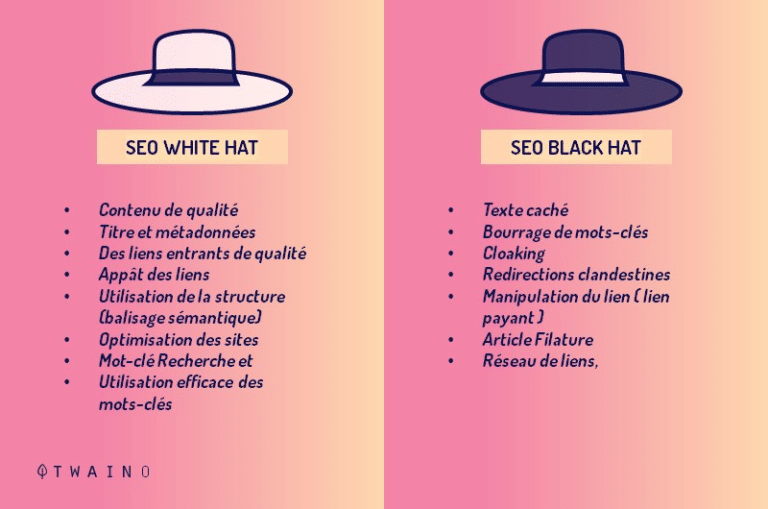

Here are the practices, mostly black hat, that can cause your site to be de-indexed by Google.

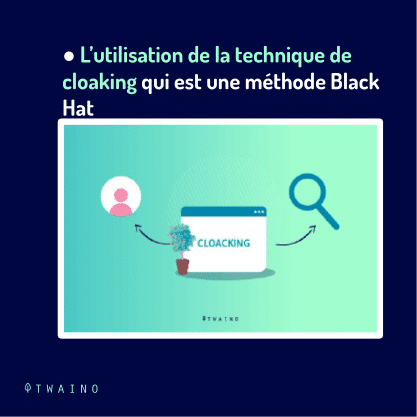

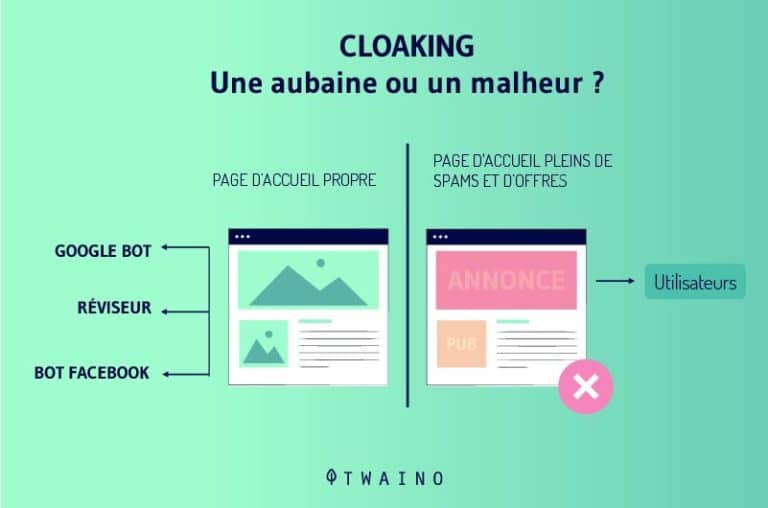

3.1. The cloaking technique

Cloaking is a Black Hat method used to improve the positions of sites in search engines.

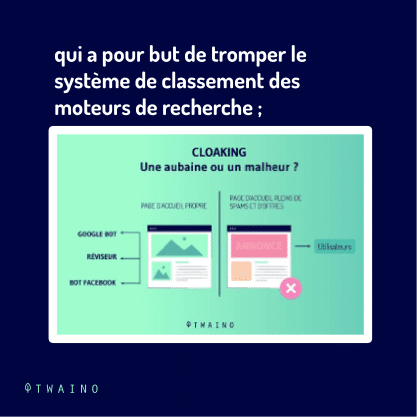

This practice consists in presenting to the search engine content that is different from what is presented to Internet users. A real camouflage system.

For example, making your site appear to be a site that shares celebrity reviews while discreetly sending visitors to explicit (pornographic) content.

The camouflage works by serving content based on the visitor's user agent or even IP address. That way, if a crawler scans the site, the clean version of the site is displayed.

On the other hand, if a human visitor is detected, the other version of the site is displayed.
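
To audit a site for this kind of behavior, you can compare the text served to a crawler user agent with the text served to a regular browser. The sketch below assumes both versions have already been fetched and reduced to plain text; the `word_overlap` and `looks_cloaked` helpers and the 0.5 threshold are arbitrary choices for this illustration, not a method used by Google.

```python
def word_overlap(text_a, text_b):
    """Return the share of distinct words common to both texts (0 to 1)."""
    words_a = set(text_a.lower().split())
    words_b = set(text_b.lower().split())
    if not words_a and not words_b:
        return 1.0
    return len(words_a & words_b) / len(words_a | words_b)


def looks_cloaked(crawler_version, visitor_version, threshold=0.5):
    """Flag a page whose two versions share too few words."""
    return word_overlap(crawler_version, visitor_version) < threshold
```

A flagged page is only a candidate for manual review, since legitimate personalization can also make two versions differ.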

Cloaking can also take the form of:

- Images that are hidden behind other images and differ from the ones actually served;

- Websites that show some content to Google but restrict the Internet user’s access to it.

Websites that require registration or login to access their content are not included. Google applies two kinds of penalty for cloaking:

- A penalty that affects only part of your site;

- A penalty that affects your entire website.

3.2. Spamming

Also called unwanted content, spam is content without value that is displayed for advertising purposes.

In fact, Google has announced the forced de-indexing of spammy content and practices, including:

- Sending automatic requests to Google;

- Designing pages with malicious content such as viruses, phishing, or other malicious software;

- The use of affiliation without sufficient added value;

- Hidden links;

- Participation in link schemes.

There are also other types of spam:

3.2.1. User-generated spam

Spam is also created by users or bots who post comments containing contact information or links to websites or user profiles.


To identify spammy comments, you can look at:

- User names;

- Email addresses that look irrelevant, strange, or simply untrustworthy.

To avoid being penalized by Google, you must act quickly by deleting all spam comments and reviewing the content on your site. To avoid spam, also consider this point:

3.2.2. Free hosting

It is common to find free hosting services. We advise you to be careful with these services, because they are often unreliable.

Instead of what you were promised, you may end up with spam ads and poor service. Google takes measures to sanction this practice.

To avoid being penalized, you need to choose useful and reliable hosting. Paid hosting is one of the best solutions for keeping full control over your website. Here is a resource that can help you: 27 criteria for choosing your web host.

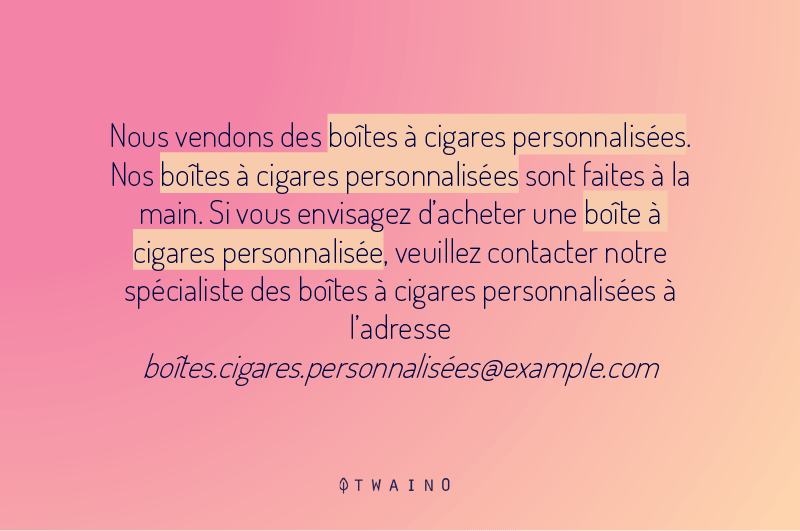

3.3. Keyword stuffing pages

Keyword stuffing is a Black Hat SEO technique that consists in loading web pages with keywords to try to influence a website's ranking in the search engine.

The stuffed parts of these pages can include the meta tags and the meta description. This method can go as far as:

3.3.1. Adding irrelevant keywords

These are keywords that have no relation to the topic. For example, your website has content about furniture, but you add keywords about gardening just to attract more traffic. Google will not hesitate to punish this kind of page.

3.3.2. Excessive repetition of keywords

The search giant is firmly against unnecessarily repeated keywords. This covers all variations of a keyword.

For example, if the keyword is “furniture from Ethiopia”, avoid this: “Ethiopian furniture is the best on the market. You can find furniture from Ethiopia in stores, whether online or in person. Ethiopian furniture from Ethiopia is comfortable and soft.”

To find out if your content is packed with keywords, feel free to take a look at this short guide: Definition Keyword Density | Twaino.
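
For a rough check, keyword density can be computed as the share of the words on a page taken up by occurrences of the keyword. The sketch below uses that definition, which is one common convention among several; the `keyword_density` helper is a hypothetical name, and no official threshold is implied.

```python
import re


def keyword_density(text, keyword):
    """Return the share of words in `text` used by `keyword` (0 to 1)."""
    words = re.findall(r"\w+", text.lower())
    keyword_words = re.findall(r"\w+", keyword.lower())
    if not words or not keyword_words:
        return 0.0
    n = len(keyword_words)
    # Count every position where the full keyword phrase appears.
    hits = sum(
        1 for i in range(len(words) - n + 1) if words[i:i + n] == keyword_words
    )
    return hits * n / len(words)


sample = "Ethiopian furniture is the best. Buy Ethiopian furniture online."
density = keyword_density(sample, "Ethiopian furniture")
```

In this sample the phrase appears twice in nine words, so it accounts for four of the nine words, a density far above what natural writing produces.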

3.3.3. Hidden texts

Another, rather old SEO method is to place keywords on a website in a font that is:

- The same color as the background of the website;

- Too small, impossible to read with the naked eye.

In any case, Google penalizes the site when it notices the deception.

3.4. Thin content

Specialists say it all the time: writing quality content is not an easy task. Even if you can meet this criterion because you are an excellent writer, the frequency of publication remains a problem.

Indeed, you have to publish quality content, but on a regular basis. If you only publish an article once a month, you are less likely to perform than someone who publishes several times a month.

To take advantage of the benefits of regular publication, some people do not hesitate to publish any type of content that comes their way.

Other people use a shortcut that consists in copying all or part of existing content (duplicate content, as seen before). Google considers such content to lack originality and to offer little added value to its users.

Since it gives such content very little weight in the SERPs, the search engine can easily de-index it, or even the entire site.

Chapter 4: Other questions about de-indexing

4.1. What does it mean to index a page?

In simple terms, indexing is the process of adding web pages to Google’s search database. Depending on the robots meta tag you used (index or noindex), Google will index your pages or not. A noindex tag means that the page will not be added to the web search index.

4.2. What does de-indexed mean?

In a few words, de-indexed means removed from an index or from the whole indexing system.

4.3. How to deindex a page?

First, you can use a robots meta tag to block indexing. Second, you can submit a request in the URL removal tool of Google Search Console.

Finally, don’t forget to check that the pages have been de-indexed.

4.4. How can I stop Google from indexing my site?

The most effective and simple way to prevent Google from indexing certain web pages is the “noindex” meta tag. Basically, it is a directive that tells Google’s robots not to index a web page, and therefore not to display it in the SERPs.

4.5. What is a noindex tag?

A “noindex” tag tells search engines not to include the page in their index and therefore in the search results. The most common way to keep a page out of the index is to add this tag in the head section of the HTML or to send the directive in the response headers.

In summary

The optimization of a website for search engines depends on several factors. Indexing is the phase of SEO that allows search engines to display the contents of websites in their results.

De-indexing, on the other hand, covers the various techniques used to remove from the index content judged to be of low quality or private, in order to improve the overall quality of the site.

There are many de-indexing techniques, and the main ones have been presented and detailed in this article. I hope it has helped you or given you a few more tips. See you soon!
