Disallow is a directive that webmasters use to manage search engine crawlers. Placed in a robots.txt file, it tells these bots not to crawl a specific page or file on a website. The Allow directive, on the other hand, tells crawlers that they may access these same elements.

Creating quality content and earning backlinks are the best-known techniques for ranking a site. While these techniques dominate, many others also contribute to a website’s SEO.

Unfortunately, most of these techniques are not well known, even though some of them are real assets for a site's SEO.

This is the case with the robots.txt file, which uses several directives to tell search engines how to crawl the pages of a website. Among these directives, Disallow stands out: it is a powerful tool that can influence a site's SEO.

In this article, we will look at the Disallow directive and its usefulness for SEO, then explore some best practices to avoid mistakes when using it.

Chapter 1: What is the Disallow directive and how is it useful in SEO?

The Disallow directive is a legitimate SEO practice that many webmasters already use. This chapter defines the directive and explains its importance for SEO.

1.1. What is the Disallow directive?

Like the Nofollow attribute, the Disallow directive influences how web crawlers such as Googlebot and Bingbot behave towards certain sections of a website.

These robots act as internet archivists, collecting the content of the web in order to catalog it. To do this, they crawl websites to discover new pages and index them.

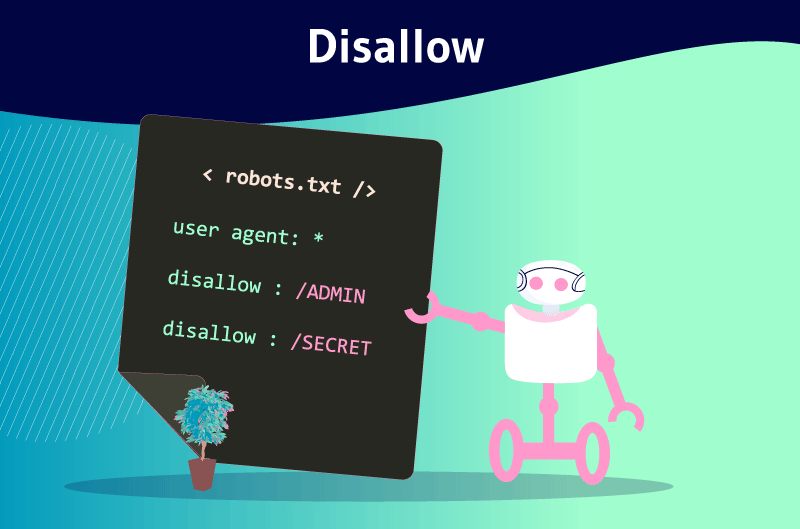

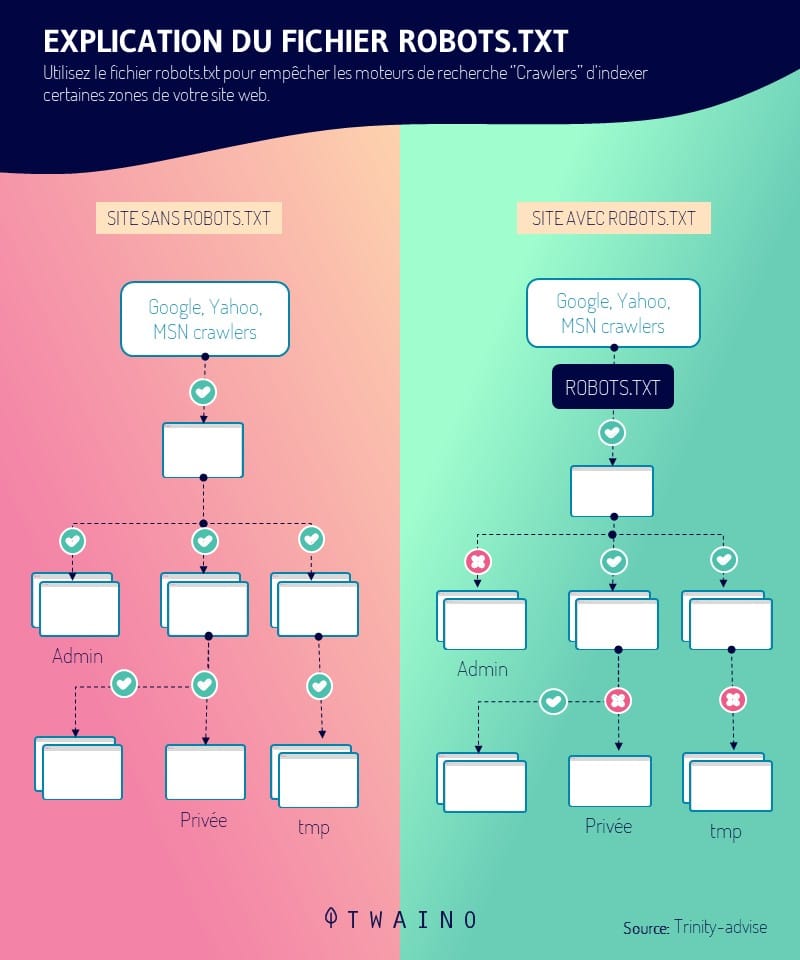

Webmasters give various instructions to the crawlers that visit their sites through a robots.txt file. The Disallow directive is one of these instructions.

It allows webmasters to block access to particular resources on a website. As a result, crawlers that respect robots.txt will not crawl URLs that are blocked with the Disallow directive.

This directive is sometimes used together with the Allow directive, which does the opposite: it gives crawlers access and tells them which resources they may crawl.

Keep in mind that robots.txt rules are only followed by well-behaved bots, such as search engine crawlers. Bots that ignore these rules, such as malicious bots, can still access the resources blocked by the Disallow directive.
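Compliance is voluntary: a well-behaved crawler reads robots.txt and checks each URL before fetching it. The minimal sketch below illustrates that check with Python's standard `urllib.robotparser` module; the rules and URLs are hypothetical examples. This module only does simple prefix matching in file order and does not implement the `*` and `$` wildcards or Google's longest-match rule, so treat it as an illustration rather than a reproduction of Googlebot.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: block /private/ for every crawler.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse the rules from text, no network access

for url in (
    "https://www.votresite.com/private/report.html",
    "https://www.votresite.com/blog/",
):
    # A compliant crawler skips any URL for which can_fetch() returns False.
    print(url, parser.can_fetch("Googlebot", url))
# https://www.votresite.com/private/report.html False
# https://www.votresite.com/blog/ True
```

A malicious bot simply never performs this check, which is why Disallow is not a security mechanism.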

1.2. Where is the Disallow directive located?

As mentioned above, the Disallow directive is placed in a robots.txt file. This file sits at the root of the site (www.votresite.com/robots.txt).

Bots only look for the robots.txt file at this location. The file must be named exactly robots.txt; variants such as Robots.txt or robots.TXT are ignored.

Besides Disallow, the robots.txt file supports other directives, such as:

- User-agent;

- Allow;

- Sitemap;

- Etc.

The robots.txt file is publicly accessible, so you can view it for any website that has one: just add “/robots.txt” to the end of the domain to see all of that site's directives.

To open it in your browser, enter an address like www.votresite.com/robots.txt. If no robots.txt file is found at this address, bots assume that the site does not have one.
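Because the location is fixed, a crawler can work out where to look from any page URL. The minimal sketch below uses Python's standard `urllib.parse`; the function name `robots_txt_url` is hypothetical. It keeps the scheme, host and port, since a robots.txt file only applies to the protocol, host and port it is served from (a subdomain such as blog.votresite.com would need its own file).

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt address for the site serving page_url.

    Only the scheme and host (including any port) are kept; the path,
    query string and fragment are replaced by /robots.txt at the root.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.votresite.com/blog/article?id=3#top"))
# https://www.votresite.com/robots.txt
```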

1.3. What is the importance of the Disallow directive?

The Disallow directive can be used for many reasons. Together with the other directives in the robots.txt file, it can help improve a site's SEO.

These directives point crawlers towards the right resources so that they crawl only the useful ones.

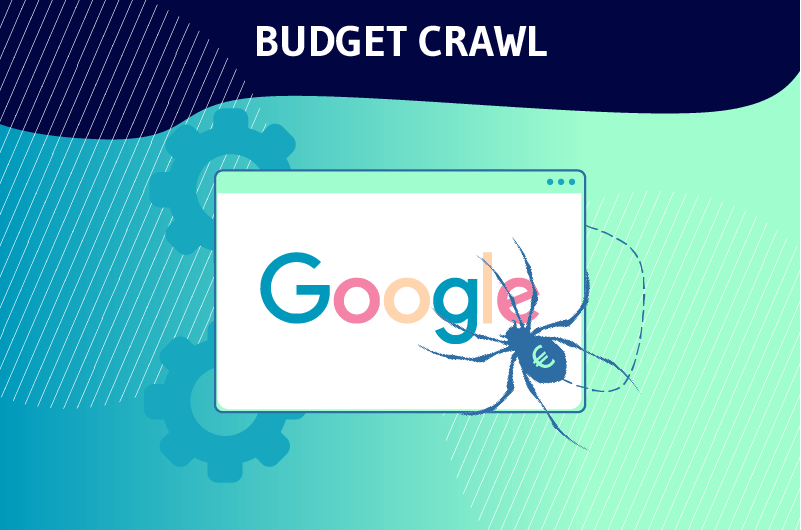

1.3.1. Optimizing the crawl budget

The Disallow directive is often used to stop crawlers from exploring pages that have no real value for a website's SEO. Google explains this in the following passage:

“You don’t want your server to be overwhelmed by Google’s crawler or to waste its crawling budget on unimportant or similar pages on your site.”

In simple terms, Google allocates a crawl budget to each website. This budget is the number of URLs that Googlebot can and wants to crawl on the site.

When bots arrive at a site, they try to crawl its pages, and the more pages a site has, the longer it takes to crawl them all.

That’s why you should use the Disallow directive to help bots skip unimportant pages, and the Allow directive to keep important pages that deserve to rank crawlable, even when they sit inside a blocked section.

In short, by putting good Disallow instructions in the robots.txt file, webmasters help bots spend the crawl budget wisely. This is one of the reasons why the Disallow directive is particularly useful for SEO.

In addition, the Disallow directive keeps compliant crawlers out of certain sections of a website and helps prevent server overload. It also lets you prevent certain resources, such as images or videos, from appearing in search results.

1.3.2. Does the Disallow directive prevent a page from being indexed?

The Disallow directive does not prevent a page from being indexed, but rather prevents it from being crawled.

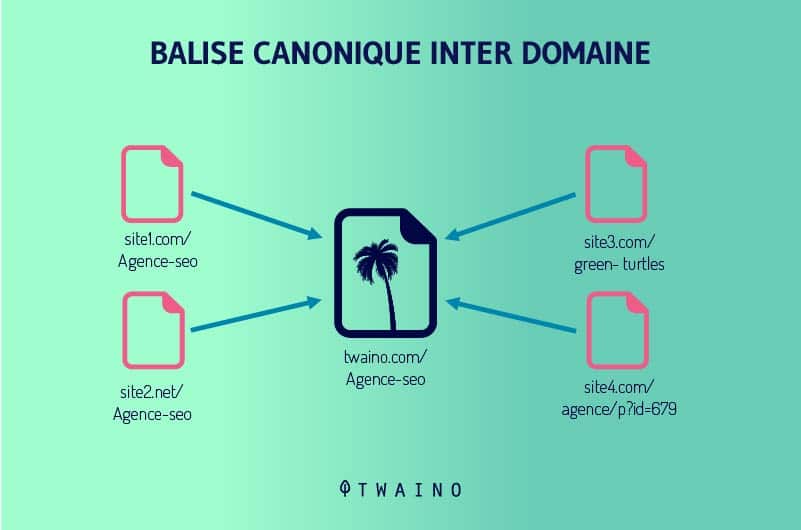

Therefore, a URL blocked with Disallow can still be indexed and appear in search results, for example when other pages link to it. It can also rank when backlinks or canonical tags point to it.

However, since Google cannot crawl the page, it can display little more than its URL. The URL may appear in place of the title, and instead of a meta description the search engine shows a message saying that no information is available because of the site's robots.txt file.

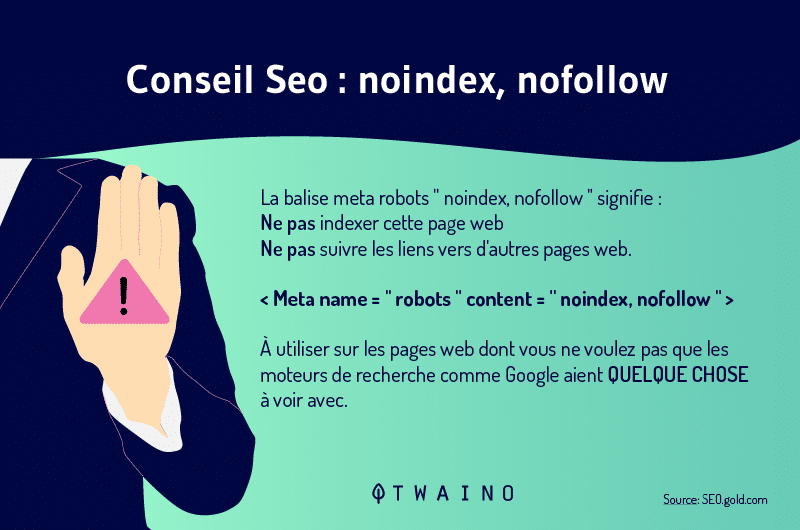

To prevent search engines from indexing a web page, use the Noindex tag. It tells them not to show the page in question in the SERPs.

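Note, however, that Disallow and Noindex should not be applied to the same URL: a crawler has to fetch a page to read its Noindex tag, so a page blocked with Disallow may stay in the index. Used on its own, the Noindex tag lets you keep some of your pages out of the index and avoid problems such as duplicate content.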

Note that this tag is different from Nofollow, which tells Google not to follow the links on a page while still allowing the page itself to be indexed.

The Noindex and Nofollow tags can be combined when you want the page neither indexed nor its links followed.
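On a page, Noindex and Nofollow are commonly written as a robots meta tag in the HTML head, for example `<meta name="robots" content="noindex, nofollow">`. A crawler can only read this tag after fetching the page. The minimal sketch below, built on Python's standard `html.parser`, shows how such a tag might be detected; the class name `RobotsMetaParser` is hypothetical, and the sketch ignores other ways of sending these directives, such as HTTP headers.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser already lowercases tag and attribute names.
        if tag != "meta":
            return
        attributes = {name: value or "" for name, value in attrs}
        if attributes.get("name", "").lower() != "robots":
            return
        # content holds a comma-separated list such as "noindex, nofollow".
        for directive in attributes.get("content", "").split(","):
            if directive.strip():
                self.directives.add(directive.strip().lower())


page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
parser = RobotsMetaParser()
parser.feed(page)
print(sorted(parser.directives))  # ['nofollow', 'noindex']
```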

Chapter 2: Forms of application of Disallow and best practices to avoid errors

Applying the Disallow directive is fairly simple: it only requires mastering the syntax and the wildcard characters. This chapter covers the forms the Disallow directive can take and good practices for avoiding errors.

2.1. User-agents to specify search engines

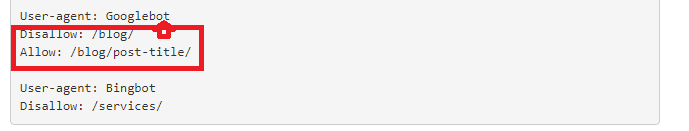

To apply the Disallow directive and the other directives in the robots.txt file, you must start by specifying the user-agents the instructions are intended for, that is, the bots that should follow them.

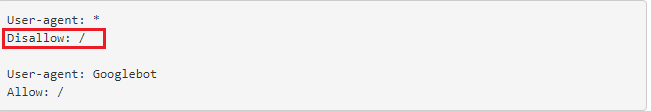

To address all bots, use the asterisk (*):

- user-agent: *

If you want to address only Googlebot, write:

- user-agent: Googlebot

To address only Bingbot, write:

- user-agent: Bingbot

A user-agent line also marks the beginning of a group of directives: all the directives between one user-agent line and the next apply to the first user-agent.
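To make the grouping rule concrete, here is a simplified Python sketch that splits a robots.txt file into user-agent groups and picks the group for a given crawler. It rests on stated assumptions: the function names `parse_groups` and `rules_for` are hypothetical, consecutive user-agent lines are treated as one group, and a crawler falls back to the `*` group only when no group names it. Real crawlers such as Googlebot apply more detailed matching rules.

```python
from __future__ import annotations


def parse_groups(robots_txt: str) -> list[tuple[list[str], list[tuple[str, str]]]]:
    """Split robots.txt text into (user_agents, rules) groups.

    Simplified: comments are dropped, field names are case-insensitive,
    and consecutive user-agent lines share one group of rules.
    """
    groups = []
    agents: list[str] = []
    rules: list[tuple[str, str]] = []
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if rules:  # a user-agent line after rules starts a new group
                groups.append((agents, rules))
                agents, rules = [], []
            agents.append(value.lower())
        elif field in ("allow", "disallow") and agents:
            rules.append((field, value))
    if agents:
        groups.append((agents, rules))
    return groups


def rules_for(robots_txt: str, crawler: str) -> list[tuple[str, str]]:
    """Return the rules of the group naming this crawler, else the '*' group."""
    groups = parse_groups(robots_txt)
    for wanted in (crawler.lower(), "*"):
        for agents, rules in groups:
            if wanted in agents:
                return rules
    return []  # no matching group: nothing is disallowed


ROBOTS = """\
user-agent: *
disallow: /private/

user-agent: Googlebot
user-agent: Bingbot
disallow: /drafts/
"""
print(rules_for(ROBOTS, "Googlebot"))    # [('disallow', '/drafts/')]
print(rules_for(ROBOTS, "DuckDuckBot"))  # [('disallow', '/private/')]
```

Note that Googlebot does not also inherit the `*` group here: only one group applies to a given crawler.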

In addition, the asterisk (*) matches any sequence of characters, while the dollar sign ($) marks the end of a URL. The pound sign (#) starts a comment.

Comments are only for humans and are ignored by robots. The * and $ characters are wildcards, and mishandling any of these three symbols can cause problems for your site.
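The sketch below shows one way to interpret these characters: it strips `#` comments from a line and translates a path pattern into a Python regular expression, where `*` becomes "any characters" and a trailing `$` anchors the match to the end of the URL. The rule `Disallow: /*?sessionid=` and the helper names `parse_rule` and `pattern_to_regex` are hypothetical examples, not a standard API.

```python
import re


def parse_rule(line: str):
    """Return (directive, pattern) from a robots.txt line, or None.

    Everything after '#' is a comment for humans and is discarded.
    """
    line = line.split("#", 1)[0].strip()
    if ":" not in line:
        return None
    directive, pattern = (part.strip() for part in line.split(":", 1))
    return directive.lower(), pattern


def pattern_to_regex(pattern: str) -> str:
    """Translate a robots.txt path pattern into a regular expression."""
    anchored = pattern.endswith("$")  # '$' is only special at the end
    core = pattern[:-1] if anchored else pattern
    # Escape literal text; each '*' matches any sequence of characters.
    regex = ".*".join(re.escape(piece) for piece in core.split("*"))
    return regex + (r"\Z" if anchored else "")


directive, pattern = parse_rule("Disallow: /*?sessionid=  # duplicate tracking URLs")
regex = pattern_to_regex(pattern)
for path in ("/shop/shoes?sessionid=42", "/shop/shoes"):
    # re.match anchors at the start, so a rule is a prefix match by default.
    print(path, re.match(regex, path) is not None)
# /shop/shoes?sessionid=42 True
# /shop/shoes False
```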

2.2. The different forms of Disallow application

The syntax of Disallow differs depending on the form of application.

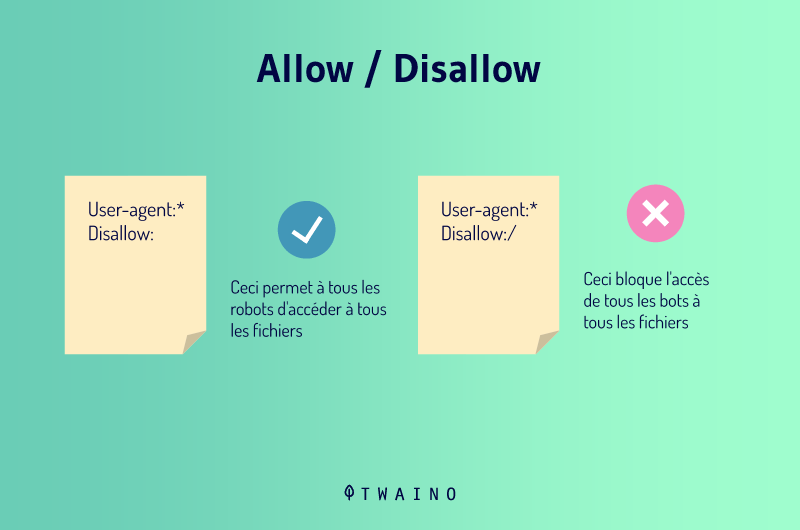

2.2.1. Disallow:

When you write Disallow: with nothing after it, you tell crawlers that there are no restrictions: everything on your site can be crawled.

This syntax is therefore of little use, since search engines will crawl your site anyway if it is absent.

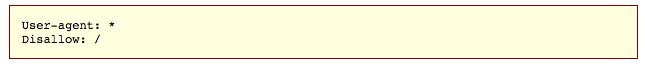

2.2.2. Disallow:/

This rule means “disallow everything” and blocks an entire site: the robots of the specified user-agents will not be able to crawl anything on it. This syntax can be used, for example, while a site is still under maintenance.
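The difference between the two forms can be checked with a minimal prefix-matching sketch in Python (wildcards ignored; the function name `is_blocked` and the sample paths are hypothetical): an empty `Disallow:` value matches nothing, while `/` matches every path because every URL path starts with a slash.

```python
def is_blocked(disallow_value: str, path: str) -> bool:
    """Prefix check for one Disallow rule without wildcards."""
    if not disallow_value:  # "Disallow:" with no value blocks nothing
        return False
    return path.startswith(disallow_value)


paths = ["/", "/blog/post-1", "/contrat.pdf"]
print([is_blocked("", p) for p in paths])   # [False, False, False]
print([is_blocked("/", p) for p in paths])  # [True, True, True]
```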

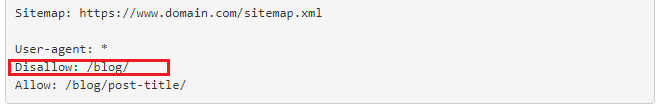

2.2.3. Disallow: /blog

This rule blocks access to all pages whose URL path begins with /blog. Therefore, all addresses starting with https://www.votresite.com/blog will be skipped during crawling.
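Because matching is done on the beginning of the path, /blog catches more than the blog folder. The short Python check below (the sample paths are hypothetical) shows that it also blocks /blog-news and /blogging.html; if only the folder is meant, `Disallow: /blog/` is narrower, although it no longer blocks /blog itself.

```python
paths = ["/blog", "/blog/seo-tips", "/blog-news", "/blogging.html", "/news/blog"]

# Without wildcards, a Disallow value matches every path that starts with it.
blocked_by_blog = [p for p in paths if p.startswith("/blog")]
blocked_by_blog_folder = [p for p in paths if p.startswith("/blog/")]

print(blocked_by_blog)         # ['/blog', '/blog/seo-tips', '/blog-news', '/blogging.html']
print(blocked_by_blog_folder)  # ['/blog/seo-tips']
```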

2.2.4. Disallow:/*.pdf

It is possible to disallow crawling of a particular file type, and that’s what this rule does with PDF files. Crawlers will skip files of this type, for example at the following URLs:

- https://www.votresite.com/contrat.pdf

- https://www.votresite.com/blog/documents.pdf

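You can also add the symbol ($) at the end of the rule (Disallow: /*.pdf$) so that it only matches URLs that end with .pdf. Without it, the rule also matches URLs that merely contain .pdf, such as https://www.votresite.com/contrat.pdf?version=2.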
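The effect of the `$` can be seen with a small Python sketch that translates each pattern into a regular expression. `robots_pattern_matches` is a hypothetical helper, not a library function: `*` becomes `.*` and a trailing `$` anchors the end. Both example URLs from the list above are blocked either way, but a URL with a query string after .pdf is only blocked by the unanchored rule.

```python
import re


def robots_pattern_matches(pattern: str, path: str) -> bool:
    """'*' matches any characters; a trailing '$' anchors the end of the path."""
    anchored = pattern.endswith("$")
    core = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(piece) for piece in core.split("*"))
    if anchored:
        regex += r"\Z"
    return re.match(regex, path) is not None  # match() anchors at the start


urls = ["/contrat.pdf", "/blog/documents.pdf", "/contrat.pdf?version=2", "/pdf-guide.html"]
for pattern in ("/*.pdf", "/*.pdf$"):
    print(pattern, [u for u in urls if robots_pattern_matches(pattern, u)])
# /*.pdf ['/contrat.pdf', '/blog/documents.pdf', '/contrat.pdf?version=2']
# /*.pdf$ ['/contrat.pdf', '/blog/documents.pdf']
```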

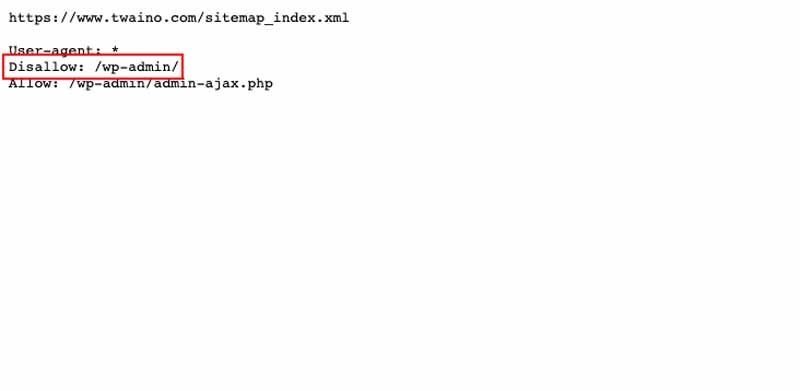

2.3. Disallow VS Allow

The Disallow and Allow directives can be used together in a robots.txt file. When they are, wildcards can produce conflicting instructions.

When Disallow and Allow rules match the same URL, Google applies the most specific rule, that is, the one with the longest path. When the matching rules are equally long, Google uses the least restrictive one (Allow).

The length of a rule is the number of characters in its path, excluding the directive name. For a URL such as /example.htm, the second rule below is applied, since it is the longest match:

- Disallow: /example* (9 characters)

- Allow: /example.htm$ (13 characters)

- Allow: /*pdf$ (6 characters)
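The precedence rule can be sketched in Python as follows, assuming Google-style resolution as described above: among the rules whose pattern matches the path, the longest pattern wins, and Allow wins a tie. The helper names `pattern_matches` and `is_allowed` are hypothetical; the rules are the three examples from the list.

```python
import re

RULES = [
    ("disallow", "/example*"),
    ("allow", "/example.htm$"),
    ("allow", "/*pdf$"),
]


def pattern_matches(pattern: str, path: str) -> bool:
    """'*' matches any characters; a trailing '$' anchors the end of the path."""
    anchored = pattern.endswith("$")
    core = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(piece) for piece in core.split("*"))
    return re.match(regex + (r"\Z" if anchored else ""), path) is not None


def is_allowed(rules, path: str) -> bool:
    """Apply the longest matching rule; on equal length, Allow wins."""
    best_length, allowed = -1, True  # no matching rule means crawling is allowed
    for directive, pattern in rules:
        if not pattern or not pattern_matches(pattern, path):
            continue
        length = len(pattern)  # counts wildcards too, e.g. "/example*" is 9
        is_allow = directive == "allow"
        if length > best_length or (length == best_length and is_allow):
            best_length, allowed = length, is_allow
    return allowed


for path in ("/example.htm", "/example-page", "/example.pdf", "/docs/guide.pdf"):
    print(path, is_allowed(RULES, path))
# /example.htm True
# /example-page False
# /example.pdf False
# /docs/guide.pdf True
```

Note that /example.pdf stays blocked: Allow: /*pdf$ matches it, but its 6-character pattern is shorter than the 9-character Disallow rule.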

2.4. Best practices to avoid errors

A misconfigured robots.txt file or a wrong Disallow instruction can hurt your site's SEO performance. That’s why it’s important to be careful not to block important resources by mistake.

Also, you should not rely on the Disallow directive alone to protect sensitive data. As mentioned before, a blocked page can still appear in search results and become public.

In addition, malicious bots that do not follow the robots.txt guidelines can crawl pages that are supposed to be protected using Disallow. Since the robots.txt file is public, anyone can look at it and find out what you are trying to hide.

As far as user-agents are concerned, you need to know how to use them so that each set of directives targets the right crawlers. Keep in mind that a single search engine may use several bots.

For example, Google uses Googlebot for organic search and Googlebot-Image for image search.

Chapter 3: Other questions about the Disallow directive

In this chapter, we look at the questions that are regularly asked about the Disallow directive.

3.1. What is a robots.txt file?

A robots.txt file tells search engines your crawling preferences. It is a sensitive file for SEO: one small mistake can compromise an entire site.

When used wisely, however, it helps Google crawl your site efficiently, which supports good rankings. To edit a robots.txt file, simply connect to your server with an FTP client and make the changes.

If you cannot connect, contact your web host. If you need to create a new robots.txt file, you can do so with a plain text editor.

In that case, be sure to replace the old file if your site already has one. Avoid word processors such as Word, which may add formatting code to your text.

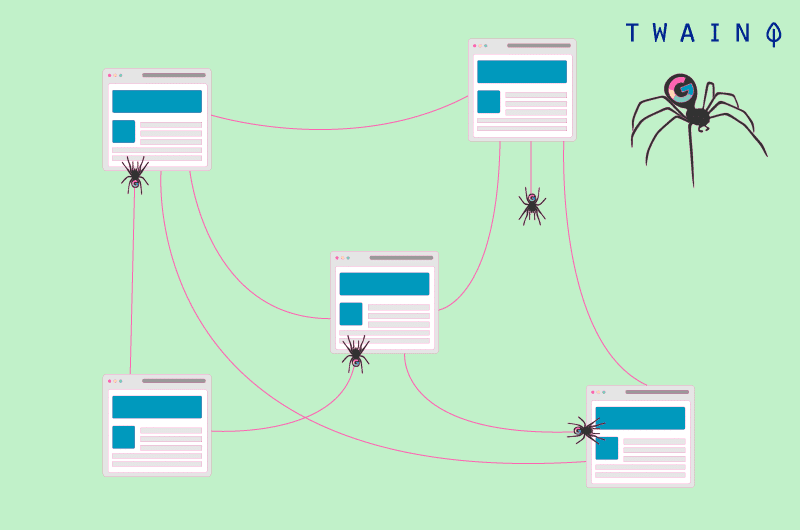

3.2. What is crawling?

Crawling, also called exploration, is the process by which search engines send robots (spiders) to examine the content of each URL.

These robots move from page to page to find new URLs or new content. It is this first step that allows search engines to discover your content and schedule it for indexing.

The robots.txt file comes into play at this stage, guiding bots according to your preferences. Crawling is distinct from indexing: indexing consists of storing and organizing the content found during crawling.

3.3. What pages can be blocked with the Disallow directive?

Search engines do not have a universal rule about which pages to block. The use of the Disallow directive is therefore specific to each site and depends on the judgment of its webmaster.

However, this directive can be used to tell spiders not to explore pages that are still being tested. You can also block access to certain pages, such as thank-you pages.

On a multilingual site, for example, you can block the English version if it is not ready yet, to prevent spiders from exploring it.

A thank-you page is generally intended for new prospects. Yet such pages can still consume crawl budget and appear in search results.

When it appears in search results, the thank-you page is available to anyone, who can then access it without going through the lead capture process.

By blocking the thank-you page, the aim is to make sure that only qualified prospects reach it. The Disallow directive alone is not enough for this, however, since it does not prevent the URL in question from being indexed.

The page in question can therefore still appear in the SERPs. To keep it out of search results, add a Noindex tag and leave the page crawlable rather than blocking it with Disallow: crawlers can only obey the Noindex tag if they are allowed to fetch the page.

Conclusion

All in all, there are many ways to improve a site's ranking. The Disallow directive is one of them, and it can give you a real SEO advantage.

It lets you stop search engine spiders from exploring content that is not useful, in order to optimize a website's crawl budget.

Spiders can then spend this budget where it counts, which can increase the visibility of your content in search results.

The Disallow directive can therefore have a significant impact when its instructions are right. However, wrong instructions can break an entire site.