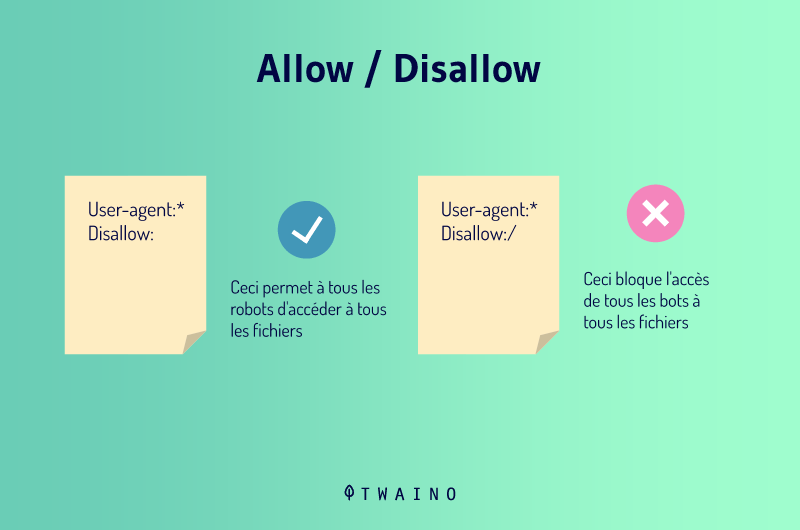

In SEO, Allow is a directive that lets you manage the crawlers sent by search engines such as Google and Bing. Its main function is to tell these bots that they may access particular URLs, sections or files of the website. Its counterpart, Disallow, tells them not to access the elements you consider sensitive.

Creating content and keeping the website active are only part of the SEO effort. Several other elements come into play when it comes to making a website's pages appear among the first results of the SERP (Search Engine Results Page).

For example, websites have a robots.txt file containing directives that let you manage what crawlers do on your pages. Allow is one of these directives.

So:

- What does this technical term mean?

- Where to find it?

- How to use it and what are its benefits for SEO?

Continue reading this article to get clear and precise answers to these questions.


Chapter 1: What is the Allow directive and what is its use in SEO?

In this chapter, I will:

- go a little deeper into the definition of the term Allow;

- show where it is located on a website;

- and explain its importance for the SEO of a web page.

1.1) What is the Allow directive?

The Allow directive is an instruction in a robots.txt file that tells search engine crawlers which pages or sections of a website they are permitted to visit (crawl).
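A minimal sketch of how a crawler reads an Allow rule, using Python's standard `urllib.robotparser` module. The robots.txt content and the `www.example.com` URLs are made up for illustration. This parser checks rules in the order they appear (first match wins), which is why the Allow line is placed before the broader Disallow line; Google instead picks the most specific matching rule.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block /private/ except for one public page.
ROBOTS_TXT = """\
User-agent: *
Allow: /private/public-page.html
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ("/private/public-page.html", "/private/secret.html", "/blog/"):
    url = "https://www.example.com" + path
    # can_fetch() applies the rules of the group matching the user agent
    print(path, parser.can_fetch("Googlebot", url))
# Expected: True, False, True
```

Paths that match no rule at all, like `/blog/` here, may be crawled by default.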

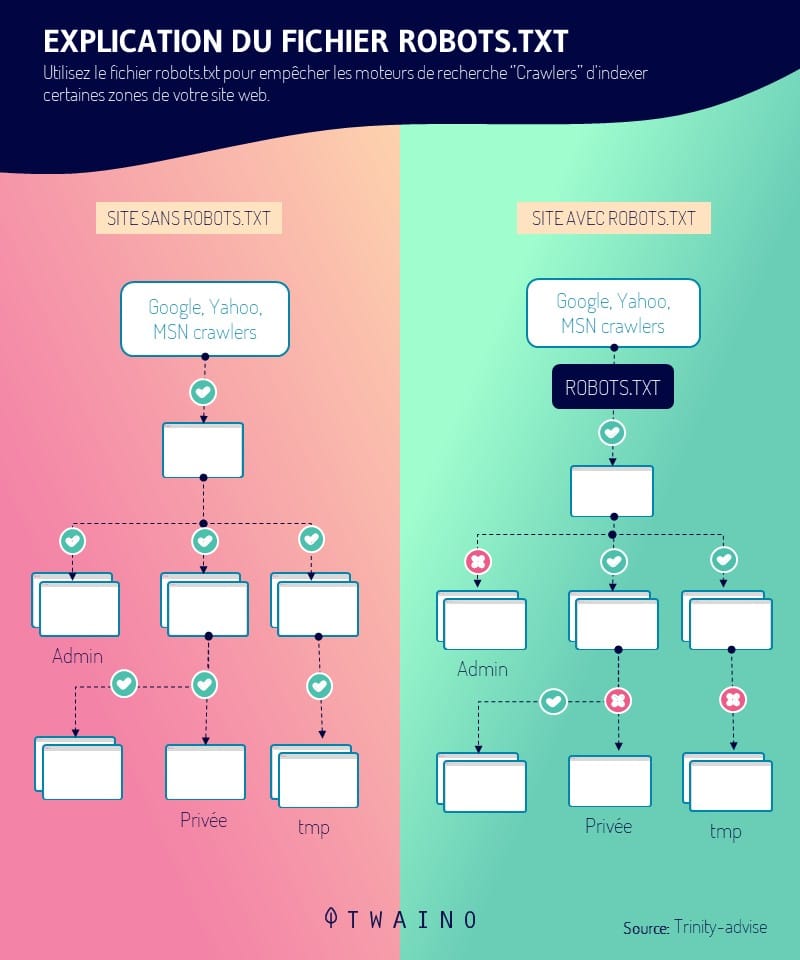

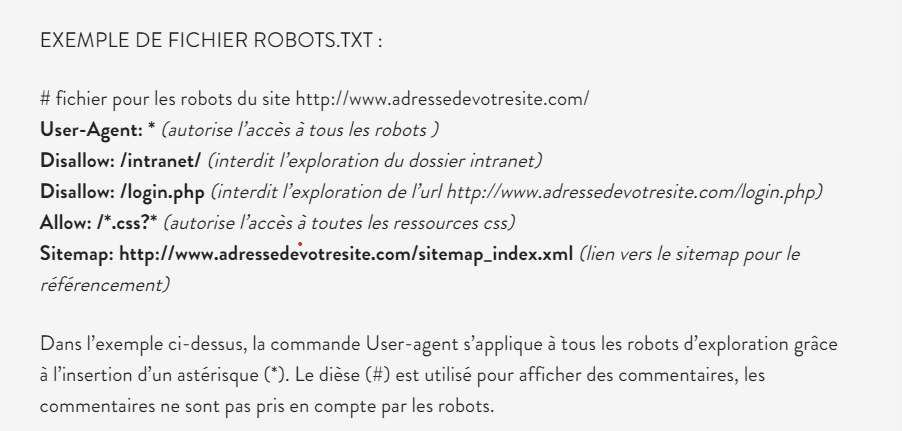

As a reminder, the robots.txt file is a plain text file containing instructions for crawlers. It contains several directives (Allow, Disallow, Sitemap, etc.), each with its own purpose.

Its syntax is meant to be read by crawlers, such as those of Google and Bing, rather than by human visitors.

Its role is to allow or block the crawling of the pages of a website or an online store. Blocking crawling does not by itself guarantee that a page will stay out of the index.

Whereas the Disallow directive prevents search engine robots from crawling a web page or an entire directory, Allow tells them which parts of the site they may crawl. It is typically used to open up a specific page or subfolder inside a directory that is otherwise disallowed.
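Google and the Robots Exclusion Protocol standard (RFC 9309) resolve conflicts between Allow and Disallow by picking the most specific, that is the longest, matching path rather than by file order; when an Allow and a Disallow path are equally long, Allow wins. The precedence rule can be sketched as follows. `is_allowed` is a hypothetical helper: it treats each rule as a plain path prefix and ignores the `*` and `$` wildcards that real parsers also support.

```python
from typing import List, Tuple

# Each rule is (kind, path prefix), where kind is "allow" or "disallow".
Rule = Tuple[str, str]


def is_allowed(rules: List[Rule], path: str) -> bool:
    """Longest-match evaluation in the spirit of RFC 9309 (no wildcard support)."""
    best_length = -1
    best_allow = True  # no matching rule means the path may be crawled
    for kind, prefix in rules:
        if not prefix:
            continue  # an empty rule such as "Disallow:" blocks nothing
        if path.startswith(prefix):
            allow = kind == "allow"
            # A longer (more specific) prefix wins; on a tie, Allow wins.
            if len(prefix) > best_length or (len(prefix) == best_length and allow):
                best_length = len(prefix)
                best_allow = allow
    return best_allow


# Order does not matter here: the more specific Allow still wins.
rules = [("disallow", "/private/"), ("allow", "/private/public-page.html")]
print(is_allowed(rules, "/private/public-page.html"))  # True
print(is_allowed(rules, "/private/secret.html"))       # False
print(is_allowed(rules, "/blog/"))                     # True
```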

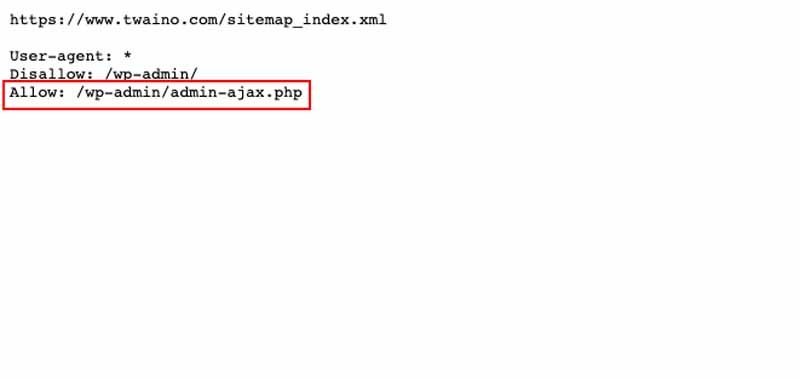

1.2) Where is the Allow directive located?

Allow is part of the robots.txt file, which is located in the root directory of the website, so its URL is the domain name followed by /robots.txt. This file contains several other directives, such as:

- User-agent;

- Disallow;

- Sitemap;

- Etc.

To check the presence of the robots.txt file, just type the following link in the address bar of your browser: http://www.adressedevotresite.com/robots.txt
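Since robots.txt always lives at the root of the host, its address can be derived from any page URL. The sketch below uses Python's standard `urllib` modules; `robots_url` and `load_robots` are hypothetical helper names, and `load_robots` performs a real network request when called.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.robotparser import RobotFileParser


def robots_url(page_url: str) -> str:
    """Build the robots.txt URL for the host serving page_url."""
    parts = urlsplit(page_url)
    # Keep scheme and host (with any port); replace path, query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def load_robots(page_url: str) -> RobotFileParser:
    """Download and parse the site's robots.txt (performs a network request)."""
    parser = RobotFileParser()
    parser.set_url(robots_url(page_url))
    parser.read()
    return parser


print(robots_url("http://www.adressedevotresite.com/blog/article?id=3"))
# http://www.adressedevotresite.com/robots.txt
```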

1.3) What is the Allow directive used for?

Below, we look at its usefulness in managing a website and its relationship with SEO:

1.3.1. The importance of the Allow directive in the management of the website

As its name suggests, this directive helps you manage a website. It lets the webmaster direct the crawlers sent by search engines to specific areas of the site, that is, to the pages they may explore during the crawl.

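In robots.txt, these rules are grouped by crawler name (the User-agent line), so different bots can receive different instructions. The Apache server directive also called Allow, covered in Chapter 2, can additionally filter visitors by IP address or by environment variables.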

In summary, the Allow directive lets you state precisely which crawlers may explore which pages of the website. Well-behaved crawlers respect these instructions, but robots.txt does not technically block access to the server.
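To illustrate per-crawler rules, here is a sketch with two User-agent groups, again using Python's `urllib.robotparser`. The robots.txt content and the URLs are assumptions for the example. A crawler that matches a named group (here Googlebot) follows only that group and ignores the `*` group, which is also how Google documents its own behaviour.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with one group for Googlebot and one for all other crawlers.
ROBOTS_TXT = """\
User-agent: Googlebot
Allow: /blog/
Disallow: /

User-agent: *
Disallow: /blog/drafts/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def check(agent: str, path: str) -> bool:
    """Ask the parser whether `agent` may crawl `path` on the example host."""
    return parser.can_fetch(agent, "https://www.example.com" + path)


print(check("Googlebot", "/blog/drafts/post"))  # True: only the Googlebot group applies
print(check("Googlebot", "/shop/"))             # False: caught by "Disallow: /"
print(check("Bingbot", "/blog/drafts/post"))    # False: falls back to the * group
print(check("Bingbot", "/shop/"))               # True: no rule matches
```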

1.3.2. The Allow directive and SEO

First of all, the robots.txt file contributes to a site's SEO because, through its directives, it steers how the different parts of the website are crawled.

With the Allow directive in this file, the webmaster can guide crawlers toward the pages of the website they should crawl, such as the best, most optimized ones.

The webmaster can also keep unwanted pages from being crawled, this time with the Disallow directive.

Together with Disallow, the main function of the Allow directive is therefore to manage the path of the robots by pointing them to the high-value pages you want indexed. Pages that crawlers explore and that search engines then index can be displayed in the SERPs.

Chapter 2: The application of the Allow directive

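In this chapter, Allow refers to the access-control directive of the Apache HTTP Server, written in the server configuration or in an .htaccess file. Unlike the robots.txt rule of the same name, it actually grants or refuses access to the server, and it works together with the Deny and Order directives (in Apache 2.4 these directives come from the mod_access_compat module and are deprecated in favour of Require). By configuring it, you can control which crawlers and other visitors may reach your site and which parts of it they may visit.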

The Allow directive is applied based on characteristics of the client sending the request, for example a crawler. Its argument can be:

- The domain name;

- The full or partial IP address;

- A network/netmask pair;

- A CIDR network specification;

- An environment variable.
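The address-based argument forms listed above can be sketched in Python with the standard `ipaddress` module. `ip_argument_matches` is a hypothetical helper, not Apache code: it only handles IPv4 and mimics the documented semantics (exact match for a full address, byte-wise prefix match for a partial one, network membership for the netmask and CIDR forms).

```python
import ipaddress


def ip_argument_matches(argument: str, client_ip: str) -> bool:
    """Check an IPv4 client against an 'Allow from' address argument (simplified)."""
    client = ipaddress.IPv4Address(client_ip)
    if "/" in argument:
        # Network/netmask pair (10.1.0.0/255.255.0.0) or CIDR (10.1.0.0/16):
        # IPv4Network accepts both forms.
        return client in ipaddress.IPv4Network(argument, strict=False)
    octets = argument.rstrip(".").split(".")
    if len(octets) == 4:
        # Full IP address: exact match.
        return client == ipaddress.IPv4Address(argument)
    # Partial IP address (first 1 to 3 bytes): compare byte by byte.
    return client_ip.split(".")[: len(octets)] == octets


print(ip_argument_matches("10.1.2.3", "10.1.2.3"))              # True
print(ip_argument_matches("10.1", "10.1.200.7"))                # True
print(ip_argument_matches("10.1.0.0/255.255.0.0", "10.2.0.1"))  # False
print(ip_argument_matches("10.1.0.0/16", "10.1.9.9"))           # True
```

Comparing byte by byte means that `10.1` does not accidentally match `10.10.0.1`.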

2.1. The application of Allow based on the domain name

Allow always takes “from” as its first argument.

If you write “Allow from all”, you give all crawlers access to your website and to all of its pages.

The only restriction applies when Deny and Order directives are configured to prevent certain clients from accessing the server.

When the argument after “from” is a domain name, hosts whose names match, or end in, the specified string can access the server and crawl the pages of the site. Only complete name components are matched, so twaino.org matches crawler.twaino.org but not faketwaino.org.

Example: Allow from twaino.org

Allow from .net example.edu
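The domain-name matching described above can be sketched as a suffix check on whole name components. `domain_matches` and `allow_from` are hypothetical helpers; in Apache the hostname being tested comes from a DNS lookup on the client's IP address, which this sketch simply takes as an input.

```python
def domain_matches(argument: str, hostname: str) -> bool:
    """True if hostname equals argument or ends with it on a name-component boundary."""
    host = hostname.lower().rstrip(".")
    suffix = argument.lower().lstrip(".")
    return host == suffix or host.endswith("." + suffix)


def allow_from(arguments: str, hostname: str) -> bool:
    """Evaluate a space-separated argument list such as '.net example.edu'."""
    return any(domain_matches(arg, hostname) for arg in arguments.split())


print(domain_matches("twaino.org", "crawler.twaino.org"))  # True
print(domain_matches("twaino.org", "faketwaino.org"))      # False
print(allow_from(".net example.edu", "bot.example.net"))   # True
```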

2.2. The application of Allow based on the IP address

The IP address also comes into play when the argument is a domain name: the server then identifies the crawler through a double DNS lookup on its IP address.

First, a reverse lookup determines the hostname associated with the IP address.

Then, a forward lookup on that hostname checks that it really resolves back to the original IP address.

The crawler gets access only when its hostname matches the specified string and the two lookups give consistent results.

With an IP address as the argument, on the other hand, no DNS lookup is needed: the client's address is simply compared with the full or partial address you specify.

Example: Allow from 10.1.2.3

Allow from 10.1 (a partial address, matching every client whose IP starts with 10.1)
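The double DNS check described in this section can be sketched with Python's `socket` module. `double_dns_check` is a hypothetical helper; the lookup functions are passed in as parameters so the logic can be tried with fake resolvers, and by default they call `socket.gethostbyaddr` (reverse lookup) and `socket.gethostbyname_ex` (forward lookup, IPv4 only). The same technique is commonly used to verify that a visitor claiming to be Googlebot really comes from Google.

```python
import socket
from typing import Callable, List


def system_reverse_lookup(ip: str) -> str:
    # Reverse (PTR) lookup: IP address -> hostname
    return socket.gethostbyaddr(ip)[0]


def system_forward_lookup(hostname: str) -> List[str]:
    # Forward lookup: hostname -> list of IPv4 addresses
    return socket.gethostbyname_ex(hostname)[2]


def double_dns_check(
    ip: str,
    domain: str,
    reverse: Callable[[str], str] = system_reverse_lookup,
    forward: Callable[[str], List[str]] = system_forward_lookup,
) -> bool:
    """Allow only if the hostname is in `domain` and resolves back to `ip`."""
    try:
        hostname = reverse(ip)
    except OSError:  # socket.herror and socket.gaierror are OSError subclasses
        return False
    host = hostname.lower().rstrip(".")
    suffix = domain.lower().lstrip(".")
    if not (host == suffix or host.endswith("." + suffix)):
        return False
    try:
        return ip in forward(hostname)
    except OSError:
        return False


# Usage with fake resolvers (hypothetical data, no network access):
fake_reverse = lambda ip: "bot.twaino.org"
print(double_dns_check("10.1.2.3", "twaino.org", fake_reverse, lambda h: ["10.1.2.3"]))  # True
print(double_dns_check("10.1.2.3", "twaino.org", fake_reverse, lambda h: ["10.9.9.9"]))  # False
```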

2.3. The application of Allow based on the environment variable

This third type of argument gives access to the site's pages only when the server finds specific information about the request stored in a mechanism called an environment variable.

In practice, “Allow from env=var-env” allows access to every request for which the environment variable “var-env” is set.

The server lets you define environment variables easily, for example with the SetEnvIf directive, so you can use Allow to control access to your site based on request headers such as User-Agent.

Example:

SetEnvIf User-Agent ^KnockKnock/2\.0 let_me_in

Order Deny,Allow

Deny from all

Allow from env=let_me_in
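To make the evaluation order of this example explicit, here is a small Python emulation of what Apache does with these four lines. It is a simplified model, not Apache code: `set_env_if_user_agent`, `order_deny_allow` and `knock_knock_access` are hypothetical helpers, and Python's `re` stands in for Apache's regular expressions. With `Order Deny,Allow`, access is allowed by default, the Deny rules are evaluated first, and a matching Allow overrides them.

```python
import re
from typing import Dict


def set_env_if_user_agent(user_agent: str, pattern: str, variable: str) -> Dict[str, str]:
    """Emulate 'SetEnvIf User-Agent <pattern> <variable>': set it when the regex matches."""
    return {variable: "1"} if re.search(pattern, user_agent) else {}


def order_deny_allow(denied: bool, allowed: bool) -> bool:
    """Emulate 'Order Deny,Allow': a matching Allow wins, otherwise Deny applies."""
    if allowed:
        return True
    return not denied


def knock_knock_access(user_agent: str) -> bool:
    env = set_env_if_user_agent(user_agent, r"^KnockKnock/2\.0", "let_me_in")
    denied = True                 # Deny from all
    allowed = "let_me_in" in env  # Allow from env=let_me_in
    return order_deny_allow(denied, allowed)


print(knock_knock_access("KnockKnock/2.0"))  # True
print(knock_knock_access("Mozilla/5.0"))     # False
```

Because the pattern is anchored with `^`, only user agents that start with `KnockKnock/2.0` get in.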

Chapter 3: Other questions asked about the Allow directive

3.1. What is Allow?

Allow is an English word meaning “to permit”. It is one of the directives found in the robots.txt file and tells crawlers which pages they may access. Combined with the User-agent line, it also defines which robots a rule applies to.

3.2. Why is Allow important for the management and SEO of a website?

Its importance comes from the control it gives you over how your site is crawled: you can point crawlers to the essential pages they should explore, and you can give access to specific robots only.

3.3. What is a robots.txt file?

The robots.txt file is a text file containing a set of directives that guide search engine robots on how to crawl a website.

3.4. What is an IP address?

An IP address (with IP standing for Internet Protocol) is a unique number permanently or temporarily assigned to a device connected to a network to identify it.

3.5. What is a web crawler?

A web crawler, also known as a spider, robot or bot, is a program used by search engines such as Google and Bing to automatically explore web pages so that they can be indexed.

Once a page has been added to the search engine's index, it can appear in that search engine's results.

In summary

A site's organic SEO is long-term work. A range of actions come into play to improve it and to make its pages appear among the first results of the SERP (Search Engine Results Page).

The Allow directive is one of the tools you should master to control how your website's pages are crawled.

I hope this guide has clearly answered your questions about the Allow directive. Don't hesitate to share it if it helped you.

Thanks and see you soon!